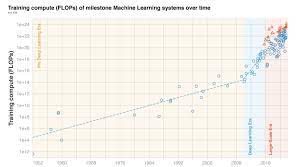

Three Eras of Machine Learning and Predicting the Future of AI

They show:

Before 2010, training compute grew in line with Moore's law, doubling roughly every 20 months.

Deep Learning took off in the early 2010s, and since then the scaling of training compute has accelerated, doubling approximately every 6 months.

In late 2015, a new trend emerged as firms developed large-scale ML models with 10- to 100-fold larger training compute requirements.

Based on these observations, they split the history of compute in ML into three eras: the Pre-Deep Learning Era, the Deep Learning Era, and the Large-Scale Era. Overall, the work highlights the fast-growing compute requirements for training advanced ML systems.
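To make the doubling times quoted above more concrete, the short Python sketch below converts a doubling time in months into an equivalent growth factor per year. It is only an illustration of the arithmetic, assuming clean exponential growth; the function names are hypothetical and not taken from the original work.

```python
import math


def annual_growth_factor(doubling_months: float) -> float:
    """Growth factor over 12 months, assuming steady exponential growth."""
    return 2.0 ** (12.0 / doubling_months)


def months_to_grow(factor: float, doubling_months: float) -> float:
    """Months needed for compute to grow by `factor` at a given doubling time."""
    return doubling_months * math.log2(factor)


if __name__ == "__main__":
    # Doubling times quoted in the text: ~20 months (before 2010), ~6 months (Deep Learning Era).
    for label, months in [("Pre-2010", 20.0), ("Deep Learning Era", 6.0)]:
        print(f"{label}: x{annual_growth_factor(months):.2f} per year")

    # How long a 100-fold increase would take at each pace (illustrative only).
    for months in (20.0, 6.0):
        print(f"100x at a {months:.0f}-month doubling time: "
              f"{months_to_grow(100.0, months):.1f} months")
```

Under these assumptions, a 6-month doubling time corresponds to a fourfold increase per year, while a 20-month doubling time corresponds to roughly a 1.5-fold increase per year.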

They present a detailed investigation into the compute demand of milestone ML models over time. They make the following contributions:

1. They curate a dataset of 123 milestone Machine Learning systems, annotated with the compute it took to train them.