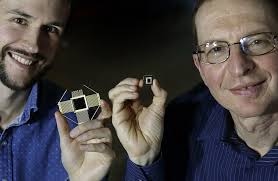

Breakthroughs in Neuromorphic Computing Could Speed Computers and AI By Ten Times

The new system relies on parallel programming of an ionic floating-gate memory array, which allows large amounts of information to be processed simultaneously in a single operation. The design is inspired by the human brain, where neurons and synapses are connected in a dense matrix and information is processed and stored in the same location.
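The article does not describe Sandia's circuit in detail. As a rough illustration of what "processed and stored in the same location" can mean, the sketch below models an idealized crossbar memory array. It assumes that each stored weight is a device conductance and that inputs are applied as voltages. Under those assumptions, Ohm's law and current summation on each row wire produce a full matrix-vector product in one physical step. The function name `crossbar_read` and the example values are hypothetical, and real ionic floating-gate devices are noisy and nonlinear.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def crossbar_read(conductances: Matrix, voltages: Vector) -> Vector:
    """Return the output current on each row of an idealized crossbar.

    Each cell obeys Ohm's law (I = G * V), and each row wire sums the
    currents of its cells (Kirchhoff's current law). In hardware every row
    settles at once, so the whole matrix-vector product is one operation.
    This loop only reproduces that result sequentially.
    """
    if any(len(row) != len(voltages) for row in conductances):
        raise ValueError("each row needs one conductance per input voltage")
    return [sum(g * v for g, v in zip(row, voltages)) for row in conductances]


# Hypothetical 2x3 array: weights stored as conductances (arbitrary units).
G = [[1.0, 0.5, 0.0],
     [0.2, 0.0, 2.0]]
V = [1.0, 2.0, 0.5]
print(crossbar_read(G, V))  # → [2.0, 1.2]
```

The weights never leave the array. Only the input voltages go in and the summed currents come out, which is the data-movement saving the brain-inspired design is aiming for.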

Sandia researchers demonstrated that the strengths of the synaptic connections in the array can be adjusted in parallel. This will allow computers to learn and process information at the point where it is sensed, rather than transferring it to the cloud for computing, which greatly improves speed and efficiency and reduces power consumption.
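One common way to express a parallel weight update in crossbar arrays is a rank-one (outer-product) update. It is used here as an assumption, not necessarily Sandia's exact programming scheme. Each cell's change depends only on the signal on its own row and column, so driving every row and column line at once can update all cells together rather than one at a time. The function `parallel_update`, the learning `rate`, and the conductance limits `g_min`/`g_max` are hypothetical illustration parameters.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def parallel_update(conductances: Matrix, row_signals: Vector,
                    col_signals: Vector, rate: float,
                    g_min: float = 0.0, g_max: float = 1.0) -> Matrix:
    """Return a new conductance matrix after an outer-product update.

    Cell (i, j) changes by rate * row_signals[i] * col_signals[j], so every
    cell can be programmed simultaneously from its row and column signals.
    Results are clipped to [g_min, g_max], a hypothetical device range.
    """
    if len(conductances) != len(row_signals):
        raise ValueError("need one row signal per row")
    updated: Matrix = []
    for i, row in enumerate(conductances):
        if len(row) != len(col_signals):
            raise ValueError("need one column signal per column")
        updated.append([
            min(g_max, max(g_min, g + rate * row_signals[i] * c))
            for g, c in zip(row, col_signals)
        ])
    return updated


G = [[0.5, 0.9],
     [0.5, 0.1]]
G_new = parallel_update(G, row_signals=[1.0, -1.0],
                        col_signals=[0.5, 1.0], rate=0.2)
print([[round(g, 3) for g in row] for row in G_new])  # → [[0.6, 1.0], [0.4, 0.0]]
```

In the example, two cells hit the clipping limits (0.9 + 0.2 is capped at 1.0, and 0.1 - 0.2 is floored at 0.0). This reflects the assumption that a physical device's conductance can only be tuned within a bounded range.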

Through machine learning, mainstream digital applications can now recognize and understand complex patterns in data. For example, popular virtual assistants such as Amazon.com Inc.'s Alexa and Apple Inc.'s Siri sort through large streams of data to understand voice commands and improve over time.

With the dramatic expansion of machine learning algorithms in recent years, applications now demand more data storage and power to complete these difficult tasks. Traditional digital computing architectures are not designed or optimized for the artificial neural networks at the core of machine learning.
