Breaking News

Part 4: Immigration Is Killing America: Here Are The Results Coming

2026-03-05 Ernest Hancock interviews Dr Phranq Tamburri (Trump Report) MP3 (MP4 to be loaded shortly)

S3E8: Your Money, Your Data, Your Blood, All Stolen

The Pentagon is looking for the SpaceX of the ocean.

Top Tech News

US particle accelerators turn nuclear waste into electricity, cut radioactive life by 99.7%

Blast Them: A Rutgers Scientist Uses Lasers to Kill Weeds

H100 GPUs that cost $40,000 new are now selling for around $6,000 on eBay, an 85% drop.

We finally know exactly why spider silk is stronger than steel.

She ran out of options at 12. Then her own cells came back to save her.

A cardiovascular revolution is silently unfolding in cardiac intervention labs.

DARPA chooses two to develop insect-size robots for complex jobs like disaster relief...

Multimaterial 3D printer builds fully functional electric motor from scratch in hours

WindRunner: The largest cargo aircraft ever to be built, capable of carrying six Chinooks

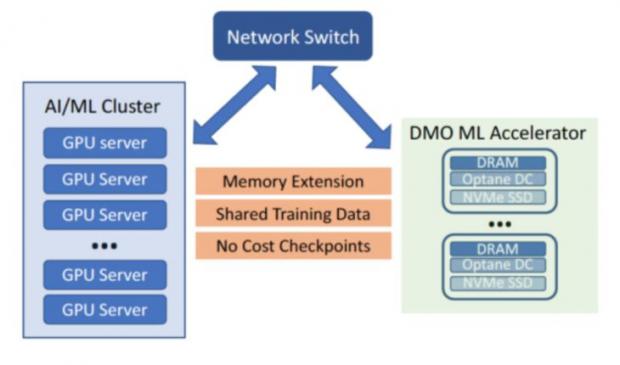

Beyond Big Data is Big Memory Computing for 100X Speed

This new category is sparking a revolution in data center architecture where all applications will run in memory. Until now, in-memory computing has been restricted to a select range of workloads due to the limited capacity and volatility of DRAM and the lack of software for high availability. Big Memory Computing is the combination of DRAM, persistent memory and Memory Machine software technologies, where the memory is abundant, persistent and highly available.

Transparent Memory Service

Scale-out to Big Memory configurations.

100x more than current memory.

No application changes.

Big Memory Machine Learning and AI

* Today, the model and feature libraries are often split between DRAM and SSD because DRAM capacity is insufficient, which slows performance.

* MemVerge Memory Machine brings together the DRAM and PMEM capacity of the cluster, allowing the model and feature libraries to reside entirely in memory.

* Transactions per second (TPS) can be increased 4X, while inference latency can be improved 100X.

RNA Crop Spray: Should We Be Worried?
