Breaking News

Nancy Pelosi has officially announced her RETIREMENT at the end of her term, January 3, 2027.

Omeed Malik: The Technocrat Muslim Billionaire Inside MAGA

Democrat-led government shutdown is now causing flight delays, threatening air traffic control,...

Top Tech News

HUGE 32kWh LiFePO4 DIY Battery w/ 628Ah Cells! 90 Minute Build

What Has Bitcoin Become 17 Years After Satoshi Nakamoto Published The Whitepaper?

Japan just injected artificial blood into a human. No blood type needed. No refrigeration.

The 6 Best LLM Tools To Run Models Locally

Testing My First Sodium-Ion Solar Battery

A man once paralyzed from the waist down now stands on his own, not with machines or wires,...

Review: Thumb-sized thermal camera turns your phone into a smart tool

Army To Bring Nuclear Microreactors To Its Bases By 2028

Nissan Says It's On Track For Solid-State Batteries That Double EV Range By 2028

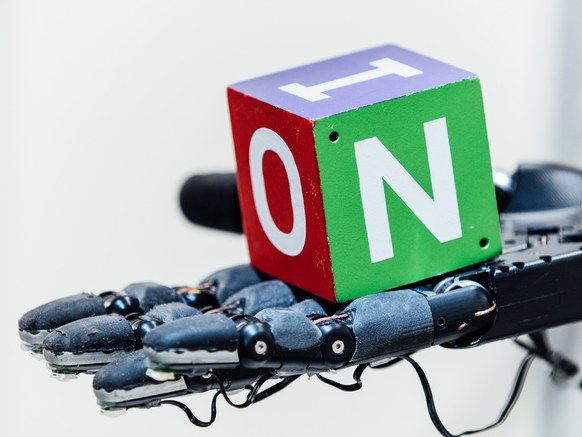

This Robot Hand Taught Itself How to Grab Stuff Like a Human

Elon Musk helped found a research nonprofit, OpenAI, to help cut a path to "safe" artificial general intelligence, as opposed to machines that pop our civilization like a pimple. Yes, Musk's very public fears may distract from other, more real problems in AI. But OpenAI just took a big step toward robots that better integrate into our world by not, well, breaking everything they pick up.

OpenAI researchers have built a system in which a simulated robotic hand learns to manipulate a block through trial and error, then seamlessly transfers that knowledge to a robotic hand in the real world. Incredibly, the system ends up "inventing" characteristic grasps that humans already commonly use to handle objects. Not in a quest to pop us like pimples—to be clear.

The researchers' trick is a technique called reinforcement learning. In a simulation, a hand, powered by a neural network, is free to experiment with different ways to grasp and fiddle with a block. "It's just doing random things and failing miserably all the time," says OpenAI engineer Matthias Plappert. "Then what we do is we give it a reward whenever it does something that slightly moves it toward the goal it actually wants to achieve, which is rotating the block."
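To make the reward idea concrete, here is a toy sketch in Python. It is not OpenAI's system: their hand is driven by a neural network in a physics simulation, while this example swaps in plain tabular Q-learning on a made-up problem. The block's orientation is reduced to 12 discrete angles, and every name and number (`N_ANGLES`, `GOAL`, the learning rates) is an assumption chosen for illustration. The one thing it shares with the description above is the reward rule: the agent mostly flails at first and earns a reward only when an action moves the block closer to the target rotation.

```python
import random

N_ANGLES = 12          # toy assumption: orientation discretized into 12 steps
ACTIONS = (-1, 0, 1)   # rotate one step back, hold still, rotate one step forward
GOAL = 6               # hypothetical target orientation


def distance(angle, goal=GOAL):
    """Shortest circular distance between an orientation and the goal."""
    d = abs(angle - goal) % N_ANGLES
    return min(d, N_ANGLES - d)


def step(angle, action):
    """Apply an action; the reward is the progress made toward the goal."""
    new_angle = (angle + action) % N_ANGLES
    reward = distance(angle) - distance(new_angle)  # +1 closer, 0 same, -1 farther
    return new_angle, reward


def train(episodes=2000, steps=30, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Tabular Q-learning with epsilon-greedy trial and error."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_ANGLES)]
    for _ in range(episodes):
        angle = rng.randrange(N_ANGLES)  # start each episode at a random orientation
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # try something random
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[angle][i])
            new_angle, reward = step(angle, ACTIONS[a])
            # Nudge the estimate toward reward plus discounted future value.
            target = reward + gamma * max(q[new_angle])
            q[angle][a] += alpha * (target - q[angle][a])
            angle = new_angle
    return q


def greedy_rollout(q, start, max_steps=N_ANGLES):
    """Follow the learned policy from `start` until the goal or a step limit."""
    angle = start
    path = [angle]
    for _ in range(max_steps):
        if angle == GOAL:
            break
        a = max(range(len(ACTIONS)), key=lambda i: q[angle][i])
        angle, _ = step(angle, ACTIONS[a])
        path.append(angle)
    return path


if __name__ == "__main__":
    table = train()
    print(greedy_rollout(table, start=1))
```

After training, `greedy_rollout` replays what the agent learned without any random exploration, which is a rough stand-in for the article's step of handing the learned behavior to a real hand.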

The Technocratic Dark State

Carbon based computers that run on iron